This is my M.Eng. master's project. I built an AR panoramic video calling system that delivers an “immersive calling” experience: users wear Rokid Air AR glasses and look around a remote environment in 360°, as if stepping into the other person’s room. 🤩

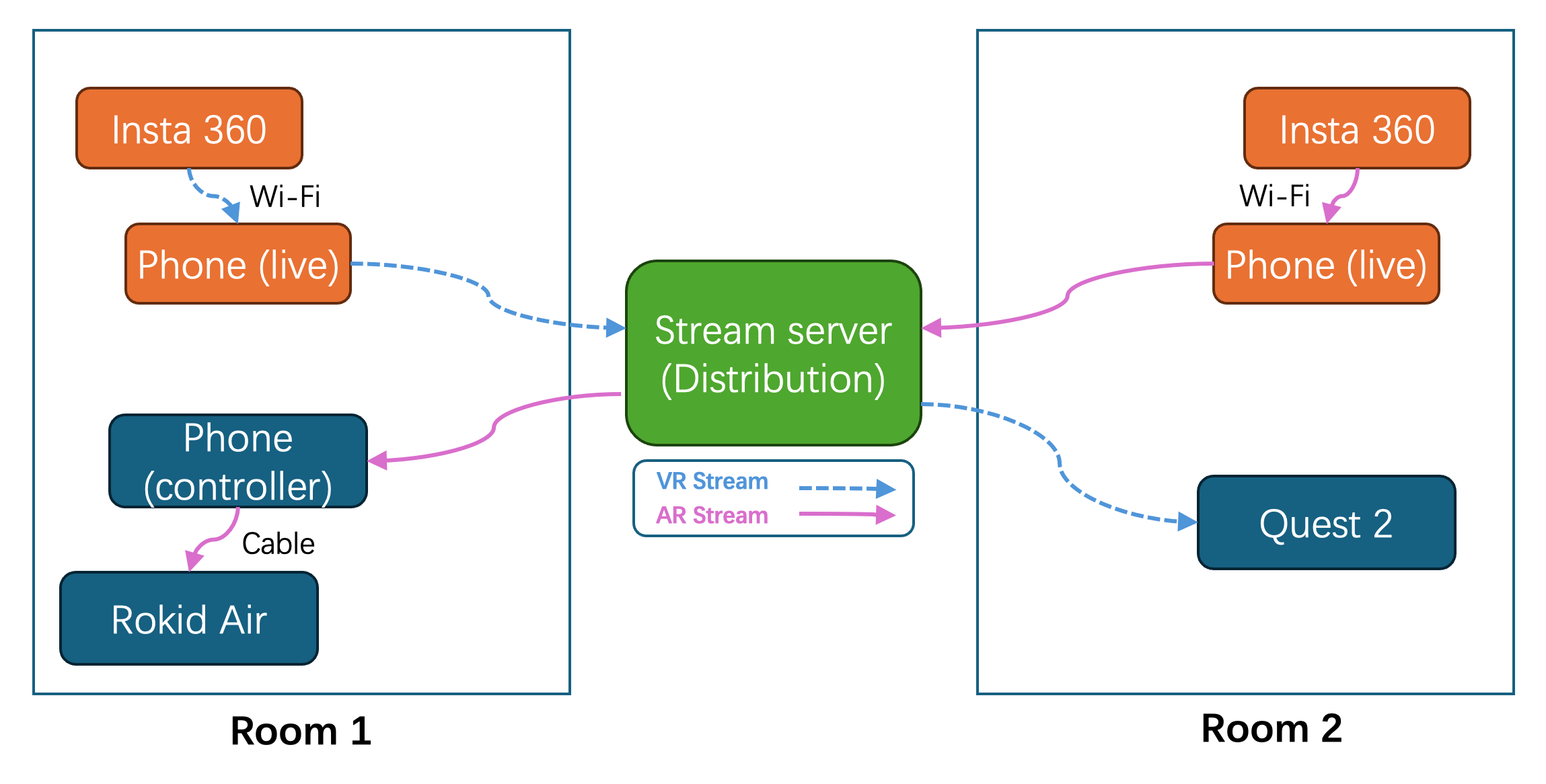

The system follows a modular capture → cloud distribution → playback workflow: an Insta360 X2 captures the scene and pushes the stream to the cloud, a cloud streaming platform transcodes and distributes it, and a Unity-based AR calling app renders the live panorama on the Rokid glasses with head-tracked viewing.

🧠 Software Development

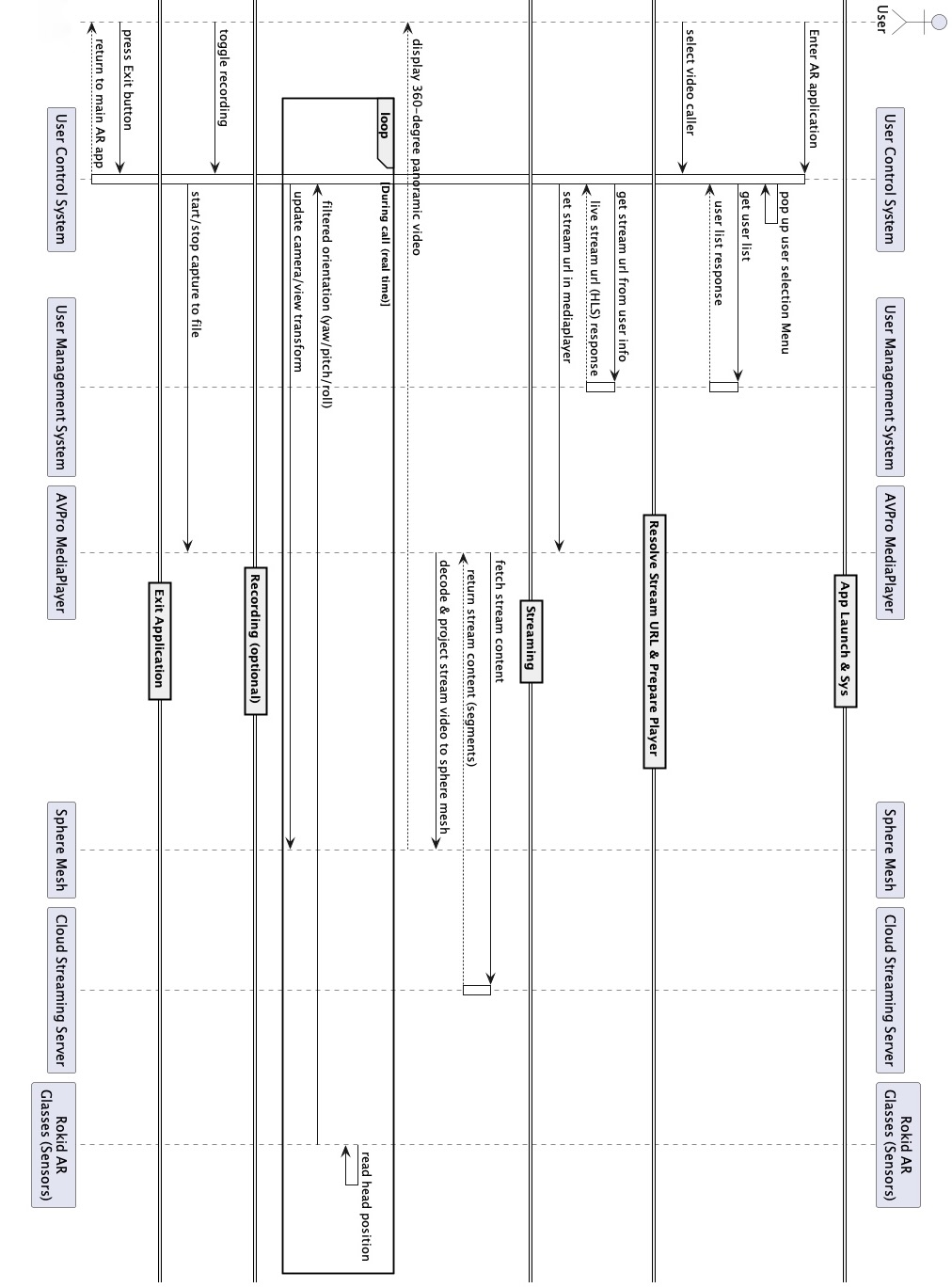

- Built the Rokid AR Calling App in Unity (Android) with Rokid UXR SDK integration for stereo rendering and 3DoF head tracking.

- Integrated AVPro Video as the live playback engine (HLS/M3U8), decoding the stream and mapping frames onto the inner surface of a sphere so the user stands inside a panoramic dome.

- Implemented contact management with JSON-based storage.

- Optimized call startup with a preloading strategy: key resources (panorama materials, UI, plugin interfaces) are instantiated in advance to avoid long waits and black screens when a call begins.
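Contact management is only described at a high level above. As a minimal sketch, JSON-backed storage can be a simple round-trip through a list of records. This assumes each contact stores just a display name and the HLS pull URL of that contact's panoramic stream. The `Contact` fields, the file path, and the `save_contacts`/`load_contacts` helpers are hypothetical, and Python stands in for the app's Unity code:

```python
import json
from dataclasses import dataclass, asdict
from pathlib import Path


@dataclass
class Contact:
    # Hypothetical schema: the app's real contact fields are not documented.
    name: str
    pull_url: str  # assumed: HLS (M3U8) playback URL for this contact's stream


def save_contacts(path: Path, contacts: list[Contact]) -> None:
    """Serialize all contacts to a JSON file, overwriting any previous file."""
    data = [asdict(c) for c in contacts]
    path.write_text(json.dumps(data, indent=2, ensure_ascii=False), encoding="utf-8")


def load_contacts(path: Path) -> list[Contact]:
    """Load contacts from JSON; return an empty list if no file exists yet."""
    if not path.exists():
        return []
    data = json.loads(path.read_text(encoding="utf-8"))
    return [Contact(**item) for item in data]
```

Because loading returns an empty list when the file is missing, a first launch needs no special case. In a Unity Android build, such a file would typically live under `Application.persistentDataPath`.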

⚙️ System & Cloud Design

- Used a cloud live streaming platform (VolcEngine) to ingest, transcode, and distribute the panoramic stream. The camera pushes via RTMP, while clients pull via HLS (M3U8) for broad compatibility.

- Configured push/pull domains and DNS CNAME records (e.g., push.example.com, pull.example.com), enabling stable publishing and playback URLs for the AR app.

- Leveraged CDN routing/edge selection so the client can fetch segments from an optimal node, improving stability under variable network conditions.
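Given the push and pull domains, the publishing and playback URLs follow from a path convention. The sketch below assumes a common `/{app}/{stream}` layout with an `.m3u8` suffix for HLS. The `build_stream_urls` helper, the `live`/`room1` names, and the path scheme are all illustrative assumptions. Real platforms such as VolcEngine may also append signed authentication parameters, so the exact format should be taken from the platform console:

```python
def build_stream_urls(push_domain: str, pull_domain: str, app: str, stream: str) -> dict[str, str]:
    """Build RTMP push and HLS pull URLs under an assumed /{app}/{stream} layout."""
    for part in (app, stream):
        # Keep each path component to a single segment so the URL layout stays predictable.
        if not part or "/" in part:
            raise ValueError(f"invalid path component: {part!r}")
    return {
        # The camera publishes here (RTMP ingest on the push domain).
        "push": f"rtmp://{push_domain}/{app}/{stream}",
        # The AR app plays this HLS playlist from the CDN-backed pull domain.
        "pull": f"https://{pull_domain}/{app}/{stream}.m3u8",
    }


urls = build_stream_urls("push.example.com", "pull.example.com", "live", "room1")
```

The `push` URL goes into the camera's streaming settings, while the `pull` URL is what the AR app's player opens.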

💡 Project Highlights

- Immersive rendering path: MediaPlayer decodes the live stream → sphere mapping creates a full 360° environment, removing the “flat video window” feeling.

- Fast call establishment & stability validation: after tapping “Call”, the connection was typically established within ~3 to 5 seconds, and playback stayed smooth without obvious stutter.

- Cross-device extensibility: the modular capture–cloud–playback workflow is designed to be reusable across AR and VR devices (e.g., AR-to-VR, AR-to-AR, VR-to-VR).
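The sphere-mapping step rests on the equirectangular projection: every view direction corresponds to one longitude/latitude, and therefore to one point in the panoramic frame. In Unity this lookup is typically handled by the sphere's UVs or a panoramic shader on the GPU. The Python sketch below only illustrates the math, and it makes several assumptions. It uses a y-up, z-forward convention (matching Unity's axes) and treats column 0 of the frame as longitude −180°. The frame resolution is hypothetical, and a real inward-facing sphere may need u flipped depending on how its mesh is built. The helper names are also hypothetical:

```python
import math


def direction_from_yaw_pitch(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Unit view direction for a 3DoF head yaw/pitch in degrees (y up, z forward)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),  # x: right
        math.sin(pitch),                  # y: up
        math.cos(pitch) * math.cos(yaw),  # z: forward
    )


def equirect_uv(x: float, y: float, z: float) -> tuple[float, float]:
    """Map a direction to equirectangular UV in [0, 1]; v = 1 is straight up."""
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    longitude = math.atan2(x, z)                  # [-pi, pi], 0 = straight ahead
    latitude = math.asin(max(-1.0, min(1.0, y)))  # [-pi/2, pi/2], clamped for float error
    return 0.5 + longitude / (2 * math.pi), 0.5 + latitude / math.pi


def uv_to_pixel(u: float, v: float, width: int, height: int) -> tuple[int, int]:
    """Convert UV to (column, row) in a frame whose row 0 is the top edge."""
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)
    return col, row
```

For example, looking straight ahead (yaw 0°, pitch 0°) lands on the frame center. For a hypothetical 3840×1920 frame, that is `uv_to_pixel(0.5, 0.5, 3840, 1920)`, which gives `(1920, 960)`. Turning the head 90° to the right moves the view to u ≈ 0.75, which is why head tracking alone is enough to "look around" the remote room.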

📸 Project Gallery

Workflow of the Panoramic Calling System

User wearing Rokid Air glasses during an AR Calling session

UML of the AR Application

🎥 Demo Video

AR Panoramic Calling Demo

🧪 Results & Limitations

- Latency tradeoff (HLS): an inherent delay of ~2 to 5 s is expected, and cross-region routes can increase it further.

- Device constraints: Rokid Air relies on a phone for compute, so long sessions (~20 min) can cause heating and performance dips. The frame rate is typically 15–30 fps, with occasional stutter during fast motion.
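The HLS delay can be reasoned about with a back-of-the-envelope model. RFC 8216 advises that a client should not start playback from a segment less than three target durations from the end of the playlist, so segment length dominates the delay. A minimal sketch follows. The segment duration, buffer depth, and encode/network terms are assumed inputs rather than values measured in this project, and `estimate_hls_latency_s` is a hypothetical helper:

```python
def estimate_hls_latency_s(
    segment_s: float,
    buffered_segments: int = 3,
    encode_s: float = 0.0,
    network_s: float = 0.0,
) -> float:
    """Rough lower-bound estimate of live HLS delay, in seconds.

    The player starts roughly `buffered_segments` segments behind the live
    edge; encoding/packaging and network transfer add on top of that.
    """
    if segment_s <= 0:
        raise ValueError("segment_s must be positive")
    if buffered_segments < 1:
        raise ValueError("buffered_segments must be at least 1")
    return encode_s + segment_s * buffered_segments + network_s
```

With 1 s segments, `estimate_hls_latency_s(1.0)` gives 3.0 s before any encoding or network time. With 2 s segments the same estimate doubles to 6 s, which is why segment length is the usual lever when the delay matters.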

This project demonstrates an end-to-end XR pipeline designed for a stronger sense of remote presence than traditional video calls.